Query

For a new project, I’ve been watching movies about evil computers and university advanced-science communities.

My primary concerns are:

1. How are supercomputers built, and how do they operate?

2. How do the people who would build such a thing, in a university setting, behave?

As ever, I turn to my community of readers for help. Movies about evil supercomputers, and movies about university-level scientists. What should I watch? What should I read? I’m sure the science-fiction world is teeming with great novels on these topics, I just don’t know enough to track them down.

Thanks!

A) Do you want / prefer movies on these topics? Or would you like some short story/novel suggestions as well?

B) How firm is the university interest? For example, Le Guin’s “Lathe of Heaven” (the novel and the two films) feature a medical doctor in an institutional setting–he’s built a fringe device (a dream-altering computer) and no one will listen to him. To me, that seems like a prof seeking institutional recognition from the academy, but it’s not in a university.

C) I will think more on this (oh, Galatea 2.2 by Powers, novel about a comp sci prof building a computer that can read lit), but you might look at the SF Encyclopedia, now online: http://www.sf-encyclopedia.com/entry/computers

Ignore question (A), bad reading comprehension on iPad this time of night.

I’m reading Galatea 2.2 right now. It’s very much the world I’m thinking of.

Vernor Vinge, Rainbows End–there’s a lot of sf stuff going on here (possible AI, medical rejuvenation, driverless cars, augmented reality, bioweapons). But one of the main plots involves a university fight over digitizing books vs. leaving the old library.

Well, you should probably first know that movies and even sci-fi pretty much get it wrong across the board.

A “supercomputer” is basically … take your desktop PC, right? Now put a thousand (or thousands) of them together in the same room (or have them distributed in more than one place). The supercomputer is more or less a brute-force approach to solving problems. Got something that would take a single PC, like, a million years to do? Well, put a million processors at it, and maybe you’ll get your results in a year. OR you go the distributed route, like SETI@home did.
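To put rough numbers on that “maybe”: the ideal case just divides the serial runtime by the number of processors, but any part of the job that can’t be split up caps the speedup. That rule of thumb is Amdahl’s law, which I’m bringing in here as a standard textbook idea rather than something from the comment above, and the 99% parallel fraction is an assumed figure purely for illustration.

```python
def ideal_runtime(serial_years: float, processors: int) -> float:
    """Best case: the work splits perfectly and every processor stays busy."""
    return serial_years / processors


def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Amdahl's law: the serial part (1 - p) never gets faster, however many CPUs you add."""
    serial_part = 1.0 - parallel_fraction
    return 1.0 / (serial_part + parallel_fraction / processors)


if __name__ == "__main__":
    years, cpus = 1_000_000, 1_000_000
    print(ideal_runtime(years, cpus))  # 1.0, the "maybe a year" case
    # Assumed for illustration: 99% of the job parallelizes, 1% must run serially.
    speedup = amdahl_speedup(0.99, cpus)
    print(round(speedup, 2), round(years / speedup))
```

With that assumed 1% serial portion, a million processors buy you only about a 100x speedup, so the million-year job takes on the order of ten thousand years instead of one. That gap is a big part of why people care so much about how a problem breaks down.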

So you have your supercomputer. What do you do with it? Well, you book time on it. I want to use the supercomputer to chug away at this problem for four hours. Okay, awesome. We can give you four hours … in a month.

That’s a real-world supercomputer. Supercomputers are not aware. Hell, NO computers are aware. Awareness is really, really hard. Right now, AI just tries to break down small problems. Like navigation. Or vision. Or you have stuff like “Openmind / Common sense” (http://openmind.media.mit.edu/), which is basically compiling a bunch of facts to “teach” a computer system about the world.

So this massive computer system got built because of funding. The University itself probably didn’t fund it. Or if it did, it did so to help a variety of departments. Computer science, chemistry, physics. Lots of calculation intensive stuff. The Hard Science. But it might have gotten a state grant, an outside grant from some entity, alumni, etc.

How do they behave? Well, they fight a lot. Gonna be a lot of fights about scheduling. Or new-hire procedures. Politics about who gets to use the thing. Fights between Computer Science (straight-up computers) and Informatics (computers applied, often to health science) because they both think they should be THE top dogs on campus when it comes to computers. And everyone fights so hard because the stakes are so small.

So that’s the real world, just to ground you a little. 🙂

Nice. This is good. Thanks!

Oh yeah, fights about scheduling. Who paid for the computer? Who has greater need? One researcher has a job that’s poorly written and takes forever to run, but his department paid for it! Another researcher has some really interesting stuff to work on, and is happier to work with the sysadmins and software people, but has a lower priority than the people who paid for the system. The guys who paid for it demand the ability to kill other people’s compute jobs, and then go ahead and do so without warning. The sysadmins hear about it from both sides. The sysadmins keep giant bottles of antacids in their desks.
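A toy model of that “whoever paid can kill your job” arrangement, purely illustrative: the `Job` and `Cluster` names, the numeric priorities, and the rule “bump the lowest-priority running job when a higher-priority one needs a slot” are all hypothetical. Real batch schedulers (Slurm, for example) have their own, far more configurable preemption settings.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    group: str
    priority: int  # hypothetical scheme: higher number wins ("our department paid for it")


@dataclass
class Cluster:
    slots: int  # how many jobs can run at once
    running: list[Job] = field(default_factory=list)
    killed: list[Job] = field(default_factory=list)

    def submit(self, job: Job) -> bool:
        """Start the job if possible; return False if it has to wait."""
        if len(self.running) < self.slots:
            self.running.append(job)
            return True
        # Cluster is full: find the least important running job.
        victim = min(self.running, key=lambda j: j.priority)
        if victim.priority < job.priority:
            # Preempt it without warning; the sysadmins hear about this later.
            self.running.remove(victim)
            self.killed.append(victim)
            self.running.append(job)
            return True
        return False


if __name__ == "__main__":
    cluster = Cluster(slots=2)
    cluster.submit(Job("protein-fold", "biology", priority=1))
    cluster.submit(Job("climate-run", "geology", priority=1))
    cluster.submit(Job("slow-legacy-code", "physics", priority=5))  # the group that paid
    print([j.name for j in cluster.killed])  # ['protein-fold']
```

Note that nothing in the rule looks at how well-written or interesting the job is, only at who paid, which is exactly where the antacids come in.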

Yeah, I figured academic life is like that — a sandbox, just at a higher level.

And with more drinking.

My old boss had been a high-level systems administrator for 35 years when I worked with him. He kept a fifth of whiskey and a hatchet in his desk.

That’s great.

I’ve actually worked on supercomputers and built a couple– small ones, but still some decently powerful computing infrastructure.

The people who build them are techies and system administrators who are lucky enough to be working at an interesting job. You don’t build supercomputers because it’s just sort of a job– it’s a niche, and a really specialized niche at that. It takes a combination of technical skill (to know how to spec out hardware and know what to buy and how to install the software that runs it), political skill (to know how to get your university to fund your supercomputer, since cash-strapped schools might or might not see the point in dropping potentially millions of dollars in equipment and salaries on a giant compute system) and ambition– you have to want to see this thing working, and you have to want to take the time to tune it and optimize it for the tasks at hand. And weirdly enough, you need interpersonal skills– working with academics is tough, since they don’t always know what they want, or why, and they may like to drop buzzwords.

Off the top of my head, I can’t think of many novels or movies about supercomputer people that I don’t find annoyingly simplistic or simply insulting. The movie _Pi_ wasn’t bad– it captures the sort of obsession you need to pursue this esoteric field of study.

Greg Egan is a serious academic who writes interesting SF– his novel _Permutation City_, while not strictly about academics and supercomputing, is actually a good story about academics and simulated reality.

I’ll think about it some more and see if I can come up with more. I know there are other novels and things that didn’t annoy me. The problem with having technical knowledge, though, is that people who get it wrong are just so annoying… 8)

Ah yes, Pi, good call. I will check out Permutation City.

What are some of the buzzwords the academics will drop?

Academics usually want whatever is new and exciting or heavily-advertised and will aggressively demand it, which can be fun if they have the budget to back it up. More frustratingly, they’ll pick something arbitrary that they saw in a flyer or magazine or that some computer company salesman pushed on them which might or might not be appropriate for what they’re doing. So for example, around the time we decided to move to Linux on Intel systems, our biggest compute time users decided to buy themselves a bunch of Alpha systems, which were already pretty much abandoned by their maker. We wouldn’t have bought them these, but they found somebody else who would (a guy who agreed to pretty much everything they asked and was very popular as a result), bought them, and then demanded that we support them, which of course we had to do. I don’t know if that fits in there, but that kind of thing is pretty typical in academic environments… 8)

Things I ran into: “We’re getting InfiniBand, which is nice, but is it 4x or 10x InfiniBand? We should definitely use 10x.” (That will quadruple the cost of the whole system.) Or, let’s see. “Will you be using Fibre Channel storage or a clustered file system?” (…it depends on the application. We could do either.) “Will we be using OpenMP or MPI?” (It depends on the application.) (“It depends on the application” is almost always the answer.) Go to a conference web site, like this:

http://sc11.supercomputing.org/

…and look at what seems to be popular for an idea of what they’d demand.

So a super-basic supercomputing overview: You need to figure out whatever it is you’re trying to do and how to break it down into pieces. Each piece runs on a separate CPU. Some problems are difficult to break down, and some are very easy– these are called “embarrassingly parallel,” which is a pretty great term. “Oh yeah, uh, it turned out to be embarrassingly parallel.” You can probably get this level of info from Wikipedia, but that’s such a great term it’s worth mentioning…
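Here’s a small sketch of what “embarrassingly parallel” looks like in practice. Counting the primes below some limit splits into independent ranges that never need to talk to each other, so each range could go to its own CPU. The prime-counting task and the function names are my own stand-ins, and threads are used only to keep the example self-contained; for CPU-bound Python you’d swap in `ProcessPoolExecutor` or hand the chunks to separate cluster nodes.

```python
from concurrent.futures import ThreadPoolExecutor


def is_prime(n: int) -> bool:
    """Plain trial division, so each chunk has some real work to do."""
    if n < 2:
        return False
    divisor = 2
    while divisor * divisor <= n:
        if n % divisor == 0:
            return False
        divisor += 1
    return True


def count_primes_in(bounds: tuple[int, int]) -> int:
    """One independent piece of work: count primes in [lo, hi)."""
    lo, hi = bounds
    return sum(1 for n in range(lo, hi) if is_prime(n))


def split_range(n: int, pieces: int) -> list[tuple[int, int]]:
    """Cut [0, n) into roughly equal, non-overlapping chunks."""
    step = max(1, -(-n // pieces))  # ceiling division, at least 1
    return [(lo, min(lo + step, n)) for lo in range(0, n, step)]


def count_primes_parallel(n: int, workers: int = 4) -> int:
    """Hand each chunk to a worker; no chunk needs anything from another."""
    chunks = split_range(n, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes_in, chunks))


if __name__ == "__main__":
    print(count_primes_parallel(100_000, workers=8))  # 9592
```

The only “communication” is adding up the per-chunk counts at the end, which is what makes the problem embarrassingly parallel.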

Alain says in another comment that there’s nothing visual, and he’s mostly right– the actual design and work is for the most part a lot of people arguing. When it comes time for funding, though, you get into stuff like display walls and parallel visualization rigs, where you have a bunch of projectors and a separate computer (or multiple computers) generating the display on each one. This gives you the effect of a single giant screen the size of your wall. I’ve seen this done in smallish rooms with six monitors, and in larger rooms with a dozen projectors– it’s actually pretty striking. The really hip visualization clusters consist of an actual compute cluster (anywhere from 20 to thousands of computers) that does the computational work– simulating global weather systems, simulating chemical interactions of complex proteins, simulating biological systems (it’s not a coincidence that these are all simulations), or simulating other complex systems. The compute side feeds whatever it’s done to a second, smaller cluster of systems that have high-end video cards and figure out how to render whatever it is you want to look at, and how to draw that on the screen. If you want, say, a wall-sized simulation of projected weather for the world for the next ten years, and to be able to roam around in it and look at individual pieces of it, this is how you’d do it.
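The display-wall trick boils down to giving each projector’s computer its own rectangle of one giant virtual screen. A minimal sketch, assuming a wall of equal-sized tiles; the 7680x2160 wall resolution and the 4-by-3 grid (a dozen projectors) are made-up numbers, and the function names are hypothetical.

```python
def tile_viewports(wall_w: int, wall_h: int, cols: int, rows: int) -> dict[tuple[int, int], tuple[int, int, int, int]]:
    """Map each (column, row) display node to the (x, y, width, height) region it renders."""
    if wall_w % cols or wall_h % rows:
        raise ValueError("this sketch assumes the wall divides evenly into tiles")
    tile_w, tile_h = wall_w // cols, wall_h // rows
    return {
        (col, row): (col * tile_w, row * tile_h, tile_w, tile_h)
        for row in range(rows)
        for col in range(cols)
    }


def node_for_pixel(x: int, y: int, wall_w: int, wall_h: int, cols: int, rows: int) -> tuple[int, int]:
    """Which display node is responsible for drawing wall pixel (x, y)?"""
    tile_w, tile_h = wall_w // cols, wall_h // rows
    return x // tile_w, y // tile_h


if __name__ == "__main__":
    viewports = tile_viewports(7680, 2160, cols=4, rows=3)
    print(len(viewports))  # 12
    print(viewports[(3, 2)])  # (5760, 1440, 1920, 720)
    print(node_for_pixel(6000, 100, 7680, 2160, 4, 3))  # (3, 0)
```

Each render node only ever draws its own viewport, which is why adding projectors mostly means adding render machines.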

Visualization clusters of that sort tend to be the sort of flagship project that gets funding– if you need to show off to non-technical management-level decision makers who aren’t going to appreciate that 140 teraflops is quite fast indeed, you build a display wall and show them some flashy stuff. I used to keep molecular rendering systems around for just this purpose… (It worked too, I’m embarrassed to admit.)

Oh, that’s another thing– there’s a main list of the fastest supercomputers in the world, and who runs them and how large they are. (Or technically, the fastest supercomputers that we _know of_ and that are run by people willing to admit they own such things. There are always rumors of massive compute systems for monitoring communications and cracking encryption and other sinister purposes.) Here’s the list as of November:

http://www.top500.org/list/2011/11/100

A few years ago I did the math and figured that it would take about 2 million dollars just to crack the top 500 on that list. That seems to have stayed consistent since then.

This is all terrific. Thanks!

Also, I have to say, thank you for asking. It’s frustrating to see computer stuff misrepresented or portrayed in ways that are just embarrassingly wrong in movies– even in details that, while they make no difference to how the plot unfolds, could be done right with maybe 15 seconds of research. (Jurassic Park: “I know this– this is unix!” versus, say, Tron: Legacy and the recognizable and generally correct unix commands on the console. I love seeing stuff that makes it clear that whoever was making the movie cares about those details!)

I realize that the realities of actually making movies mean that stuff may still get to the screen that makes me roll my eyes, but at least you started off asking, and that means a lot. So, thanks.

The Jurassic Park thing is embarrassing. On the other hand, Tron: Legacy sucks.

Ironically, the weird interface in Jurassic Park was a real experimental interface that Silicon Graphics came up with (http://en.wikipedia.org/wiki/Fsn); I remember playing around with it once or twice on one of our department’s machines in grad school.

But, yeah, hardly typical of a Unix interface!

Brainstorm (1983) for university level scientists working in a big company.

Demon Seed (1977) for more university-level scientists (but less convincing than in Brainstorm) working in a big company and giving birth to a supercomputer.

The classic stories of creating and dealing with a supercomputer are the Multivac short stories by Asimov. But that’s far in the past!

No movie or novel shows how supercomputers are really built these days because there’s nothing dramatic. Most of all, there is NOTHING visual. It’s all a series of bureaucratic meetings and discussions of algorithmic concepts. Basically, you build a supercomputer (hardware) and develop an operating system for it much in the same way you build a new, innovative series of big computers.

On the other hand there are the human aspects of doing this and they can be interesting even if there is little drama and zero graphic aspects.

The best books I’ve read on this were “The Soul of a New Machine” by Tracy Kidder and “Hackers: Heroes of the Computer Revolution” by Steven Levy.

I think that it’s worth reading Kidder’s book even if it’s not in a university setting, because he describes university-level scientists working together in a common environment. Parts of Levy’s book might be irrelevant for you, but the ones dealing with the life of young scientists at MIT are crucial, I think.

I don’t play computer games at all, but as a science fiction maniac I stay aware of all computer games that have a science fiction aspect to them. I think you might want to take a close look at GLaDOS and the company that gave “birth” to her, Aperture Science. The Wikipedia entry on her is an awful mess but there is more all over the Web and you’ll get a nice summary of just how she’s a twisted version of HAL by listening to the words in her “song” in the credits at the end of the game:

http://www.youtube.com/watch?v=Y6ljFaKRTrI

P.S. I lied a little bit when I said that there are zero graphic aspects to building a supercomputer (or any large software project). Over the last twenty years I’ve observed the gradual invasion of color in programming editors. Software scientists usually have a remarkable ability to perceive different shades of colors and they use this ability when composing software, alone or in a team. It’s all text and numbers, but man they’re incredibly colorful.

Oh yes, Brainstorm. Yes, good.

And yes, I’m a huge Portal fan. Aperture Science and GLaDOS have been a huge influence on me the past couple of years.

While slightly off-topic, I’d like to bring up one of my favourite movies of recent cinema, Moon.

There is so much to love about Moon, but of interest here is how they play on audiences’ preconceived notions of machine intelligences. GERTY was immediately associated with HAL 9000 from the moment of his first appearance, both in action and design. I feel that the audience was gently but purposefully steered toward distrusting GERTY and thinking of him as the “evil supercomputer” in order to make his actions later in the movie… well… I don’t want to spoil anything, but let’s just say that I was impressed with how it was done.

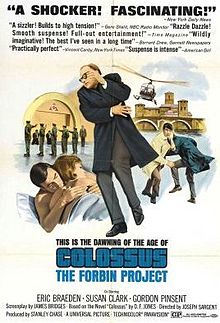

The trouble with any movie about a supercomputer, evil or not, is that we seem to innately distrust advanced technology, something I thought would lessen as our world becomes more and more immersed in high technology at an everyday level, yet it hasn’t. When Terminator, 2001, Colossus and Altered States were all made, “advanced technology” was still the stuff of movies (or Sony Walkmans). But today we have microprocessors in our phones, iPads and even the kitchen sink that make Star Trek look like steampunk. Technology is unavoidably everywhere, without any evil uprising, yet as soon as a computer shows up in a movie it’s perceived as “evil” and “going to kill us all!” (With the exception of KITT. Everybody loves KITT.)

But, back to the actual topic: Check out the second episode of the BBC series “Dirk Gently” (third episode if you include the Pilot in the count). It has a supercomputer built in a university, though, admittedly, it veers way off reality in favour of humour and mystery pretty quickly. It is based on a certain holistic detective created by the supercomputer mind of Douglas Adams, after all.

The audience was cued to distrusting GERTY because he was voiced by Kevin Spacey. No one trusts Kevin Spacey.

I haven’t seen Dirk Gently, but I’m a big Douglas Adams fan; I’ll have to check that out.

Well, rather typical of the BBC, the DVDs hit a day or two after the third episode aired! So they should be easy to come by on Amazon or Play.com. The cast is fantastic, and the writing is very good, if not up to the stellar quality of BBC’s other detective series of late, Sherlock. The Dirk Gently pilot is a pared-down version of the first book, while the three episodes of Season One are all new stories, all of which successfully retain the Big Idea of the Interconnectedness of All Things at their core.

Can’t wait!

An artificial intelligence quote that I think sums up some of the questions about computers and intelligence and such: “asking if a computer can think is like asking if a submarine can swim”

The contemporary sections of Neal Stephenson’s “Cryptonomicon” really nail a lot of what computer research (and hacker culture) is like. It’s not quite supercomputers, but it’s folks building out new types of data center and computer infrastructure. Also, to get a good sense of how the actual underlying raw infrastructure happens, Stephenson’s article in Wired from the mid-’90s about building out undersea cables is pretty awesome. (http://www.wired.com/wired/archive/4.12/ffglass.html) Sections of his latest, “REAMDE”, nail current high-tech culture and practices pretty well.

It used to be that the image of a supercomputer was a single monolithic mainframe-type system – Colossus in its sealed mountain vault. The rise of interconnected systems and high-speed / high-capacity networks that can shovel huge amounts of data around has put things more in the model of “Fast, Cheap, and Out of Control.” There’s no Google mainframe, there’s no Microsoft mainframe. Instead of a giant computer taking up an entire room or building, the latest cutting edge in data centers is containerized ‘redneck data centers’: you take a whole pile of cheap fast computers and stuff them into a shipping container with network switches and built-in cooling and such, truck it into place on a data center floor, and hook up the main feeds for power and network. Then you let it run and ignore it until enough components fail that it’s worth swapping out and taking it back for repair.

The hot new thing in computers these days is ‘virtualization’. Instead of a single physical server working as a single computer system with its own address and such, a server serves as a host to a bunch of ‘virtual’ systems that all run inside emulators so each system (“virtual machine” or “vm”) thinks it’s alone, and the host machine handles talking among them, interfacing with the world, and handling resource sharing. There are a bunch of cool advantages to this – because each virtual machine’s resource settings (amount of memory, for instance) are handled by the host machine, you can increase the memory on a virtual machine by just changing a configuration setting rather than having to open a case and stuff memory chips in.

So what ends up happening there is you have a big chassis that takes a dozen or so “blade” computers that slide in like books in a bookcase. Each blade is a single physical server that could have upwards of 300 GB of memory (not a typo – high-end blade servers are available that have more memory than my laptop has hard drive storage) that serves as a host for dozens of virtual machines. The controlling system can handle moving virtual machines from one blade to another, so if one blade dies you can move the systems over to the rest of the blades. VMware is the industry leader for virtual machine stuff. Other notable candidates are Xen and VirtualBox, which are both open source rather than commercial.
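A rough sketch of the “if one blade dies, move its VMs to the rest” idea. The blade and VM names, the memory sizes, and the greedy “biggest VM onto the blade with the most free memory” rule are all assumptions for illustration, not how VMware or Xen actually decide placement. It also shows the memory-as-a-setting point: a VM’s memory here is just a number the host keeps track of.

```python
from dataclasses import dataclass, field


@dataclass
class Blade:
    name: str
    memory_gb: int
    vms: dict[str, int] = field(default_factory=dict)  # VM name -> memory in GB (just a setting)

    def free_gb(self) -> int:
        return self.memory_gb - sum(self.vms.values())


def evacuate(dead: Blade, survivors: list[Blade]) -> dict[str, str]:
    """Move every VM off a failed blade; return {vm_name: new_blade_name}."""
    placement: dict[str, str] = {}
    # Place the biggest VMs first, each onto whichever blade has the most free memory.
    for vm, mem in sorted(dead.vms.items(), key=lambda kv: kv[1], reverse=True):
        target = max(survivors, key=lambda b: b.free_gb())
        if target.free_gb() < mem:
            raise RuntimeError(f"no blade has {mem} GB free for {vm}")
        target.vms[vm] = mem
        placement[vm] = target.name
    dead.vms.clear()
    return placement


if __name__ == "__main__":
    b1 = Blade("blade1", 256, {"web": 16, "db": 64})
    b2 = Blade("blade2", 256, {"mail": 8})
    b3 = Blade("blade3", 256)
    b2.vms["mail"] = 32  # "adding memory" to a VM is a config change, not a screwdriver job
    print(evacuate(b1, [b2, b3]))  # {'db': 'blade3', 'web': 'blade2'}
```

Placing the largest VMs first is a common greedy heuristic: the big ones are the hardest to fit, so they get first pick of the free space.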

Anyways, I digress.

So, yeah, my understanding is that for the most part, the shift is away from single mainframe supercomputers to massively parallel collaborative systems made up of smaller machines all teeming and swarming together.

Fascinating. And very useful. Thanks!

Speaking of Neal Stephenson, I loved his first novel, The Big U. His depiction of a university descending into barbarism is half-satirical, half-AWESOME. His narrator made interesting insights about universities persevering through incredible political changes. Take the University of Paris/Sorbonne: it weathered the Hundred Years’ War, the French Revolution, the Nazi occupation, et cetera, with only minor hiccups*. Stephenson has since disowned the novel as juvenilia, and it’s certainly dated, but hey, I still like it.

I can’t help you out with supercomputers but you may wish to research fire suppression systems. If there was a fire in a computer room, spraying down the equipment with water would be fatal to the computer, right? Instead of water, some companies protect their sensitive areas with carbon dioxide or halon gas to snuff out the flames. Much better for the equipment. Not so good for oxygen-breathing life forms that might be in the same room.

Thanks for picking up the blog again, Todd!

*oversimplification! but even so!

I’ve just watched a BBC Horizon documentary, The Hunt for AI (sorry, you may need to jump through some hoops to see that in the US) which included a couple of useful examples.

The presenter is shown around an IBM Blue Gene, a roomful of ominous black monoliths, which are really just fancy cupboards filled with racks of processor-nodes.

The cooling-system was very loud – the interview with a system-designer was done wearing earplugs!

That documentary also shows IBM’s Watson, which won a game of “Jeopardy!” against the show’s highest-earning human competitor.

(you can see a video of that here)

You might want to look at Grid Computing – that’s where lots of smaller computers can be combined to form a virtual supercomputer for a fraction of the price.

This takes the idea of the parallel-processing supercomputers mentioned so far, where you have many processor-nodes running small chunks of a larger task, and spreads that concept over a wider network.

Usually, a server farms out portions of a task to the slave machines, which return the results once completed.

BOINC allows you to donate spare processing-power on your home computer to various science-grids, usually when your screen-saver kicks in.

SETI@home is probably the best-known of those systems (at least it’s the first one I knew about) – that farms out chunks of radio-telescope data for signal-processing.
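A stripped-down sketch of that farm-out-and-collect pattern, in the spirit of volunteer grids like BOINC (this is not BOINC’s actual protocol): the server cuts the data into work units, hands them out, and reissues any unit whose volunteer never reports back, since home machines get switched off. The volunteers here are just Python functions, and one that returns `None` stands in for a machine that disappeared; `run_grid` and its parameters are hypothetical names.

```python
from collections import deque
from typing import Callable, Optional, Sequence

Volunteer = Callable[[Sequence[int]], Optional[int]]


def run_grid(data: Sequence[int], unit_size: int, volunteers: list[Volunteer], max_handouts: int = 1000) -> list[int]:
    """Hand out work units round-robin and collect results in work-unit order."""
    pending = deque((start, data[start:start + unit_size]) for start in range(0, len(data), unit_size))
    results: dict[int, int] = {}
    handouts = 0
    while pending:
        if handouts >= max_handouts:
            raise RuntimeError("too many lost work units; giving up")
        unit_id, chunk = pending.popleft()
        volunteer = volunteers[handouts % len(volunteers)]
        handouts += 1
        answer = volunteer(chunk)
        if answer is None:
            pending.append((unit_id, chunk))  # lost: back in the queue for someone else
        else:
            results[unit_id] = answer
    return [results[unit_id] for unit_id in sorted(results)]


if __name__ == "__main__":
    def flaky(chunk: Sequence[int]) -> Optional[int]:
        return None  # a volunteer whose PC got switched off mid-job

    partial_sums = run_grid(list(range(10)), 3, [sum, flaky, sum])
    print(partial_sums, sum(partial_sums))  # [3, 12, 21, 9] 45
```

Reissuing lost units is the key difference from a single in-house cluster: the server can’t trust any one volunteer to finish, so it just keeps handing the work out until someone does.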

In Terminator 3, everyone’s favourite evil overlord supercomputer Skynet is shown to be a distributed system, thwarting any plans to just “pull the plug” – although it is spread surreptitiously like a Botnet, not really something a university team could get funding for 😉

I’d recommend reading Charles Stross’s recent near-future novel “Rule 34” – that actually includes a university computer-science professor who describes the AI system that his team developed; I reckon that may be directly applicable.

That system is described as being distributed over volunteers’ phones, a handy source of cheap networked processing-power!

Charlie is very tech-savvy, so his computing concepts and projections usually go in plausible directions.

Wow, thanks. Who wouldn’t want to read a book called Rule 34?

Real world example of what a supercomputer is used for:

http://www.wired.com/threatlevel/2012/03/ff_nsadatacenter/all/1

For visual inspiration, there’s the Mare Nostrum supercomputer at the Polytechnic University of Catalonia, Barcelona, which was installed in a deconsecrated chapel:

http://gizmodo.com/293608/marenostrum-the-worlds-most-gorgeous-super+computer

More pictures here:

http://www.bsc.es/about-bsc/gallery

From what I can tell, it runs a lot of different kinds of simulations (but mostly in the molecular dynamics and materials science realms). Rather than researchers at a single university fighting for time, there’s a national-level system for submitting proposals (it reminds me a lot of how telescope time gets allocated for astronomers).

Any idea who paid for Mare Nostrum?

My impression is that it was a combination of the Spanish government and the provincial government of Catalonia (via their respective research funding agencies), and maybe the University as well.

I only had a minute or two to glance through this, so I apologize if I’m duplicating anything here. I’m working on a similar story for a web-comic series and I’ve found that setting up a Google News reader for IARPA (the Intelligence Advanced Research Projects Activity) works like a sort of tickler file. For example, I just saw this today: http://publicintelligence.net/meet-catalyst/ This organization is like DARPA but with a narrower focus on the things the NSA is interested in. It doesn’t get more evil and super-computerey than that. These are the government officials who funnel money into academic research and development departments and set up various Faustian Bargains with them.

Oh super. That’s great, thanks.

A strong, serious-minded science fiction novel with a university setting is TIMESCAPE by Gregory Benford:

http://en.wikipedia.org/wiki/Timescape

I know time-travel isn’t the focus of this, but PRIMER is one of the best films I’ve ever seen about showing tech people talk about what they do. I didn’t *understand* the technical talk, of course, but I certainly believed it.

Even further afield, the comic novel SMALL WORLD by David Lodge is a hilarious and accurate portrayal of academe – specifically, academic conferences. The second half of the book becomes a contemporary romance (in the old school, ‘epic’ sense of the word) as a young man literally circumnavigates the globe trying to catch up to his lady love, who’s attending conferences world-wide.

I’m a huge Primer fan, I think it’s the best movie, dollar for dollar, ever made. I also have no idea whatsoever what happens in Act III.

Thanks for the other recommendations!

re: what are people in high academia like, one of the fundamental character things happening with academic success is that all your flaws are forgiven if you perform in the prescribed way. this is one reason among a few others that you can end up with lunatics and narcissists in drastically more respectable positions than you would expect.

see, for example, Gödel, but in everyday academia the examples are myriad, if less pronounced.

wonder if that html will go through.

for two examples, i knew one guy who would get so carried away early in his lectures (to 10~15 people; advanced, smaller classes) that from about halfway through on he would yell at the top of his lungs, and numerous visible splatters of spittle would fly all over the students closest to him. i never once saw any of those MA and doctoral students say anything about it to him (i knowingly sat in the back).

another guy i knew kept a husband-and-wife doctoral student team with him most of the time. he was the advisor of one or both of them. he was very clearly having an affair with the wife, and would shamelessly sort of hit on her around the husband, in what was pretty obviously a display for the husband. the same guy would sit in on the classes of other professors he didn’t like and ask irrelevant questions throughout, just to piss them off.